Agentic AI Systems' Potential in Upstream Oil & Gas

Buzzword or Not?

In the past few months I have been seeing more and more business proposals with “Agentic AI” as their core offering. Initially, I was under the impression that this was a consulting-firm buzzword. But apparently not! It is a serious and evolving technical paradigm grounded in current AI research, particularly where autonomy, planning, and interaction are required. Based on what I understand so far, it is technically relevant to AI systems where autonomous action toward a defined goal is needed, where that goal can only be achieved through multiple interdependent steps, and where continual learning and correction are needed through memory and feedback mechanisms. This is in contrast to tools like ChatGPT, or foundation models, being used in isolation: Agentic AI is supposed to enable pipeline-style automation that depends on decision-making to accomplish tasks. Agentic systems are built on a combination of LLMs, multimodal models, memory architectures, and orchestration logic (a compound agentic system), and they are increasingly used in real-world practice.

It initially sounded like a buzzword to me because it is frequently used in vendor pitches, consulting presentations, and market-facing strategy decks, often without grounding in the engineering and modeling work required to do it well. The word “agent” is easy to throw around. But actual Agentic AI requires environment modeling, feedback handling, planning logic, and domain-specific memory grounding. So the term is valid, but it can be abused, much like "digital twin" or "AI-driven transformation" are often used in irrelevant contexts. I think presentations featuring Agentic AI should be taken seriously if:

The problem space involves complex workflows, multimodal data, and decision loops

The goal is reliable autonomy

You can define goals, tools, memory, and constraints

Otherwise, if a startup presents Agentic AI as its core offering, there is a good chance you will end up with a chatbot and a nice UI.

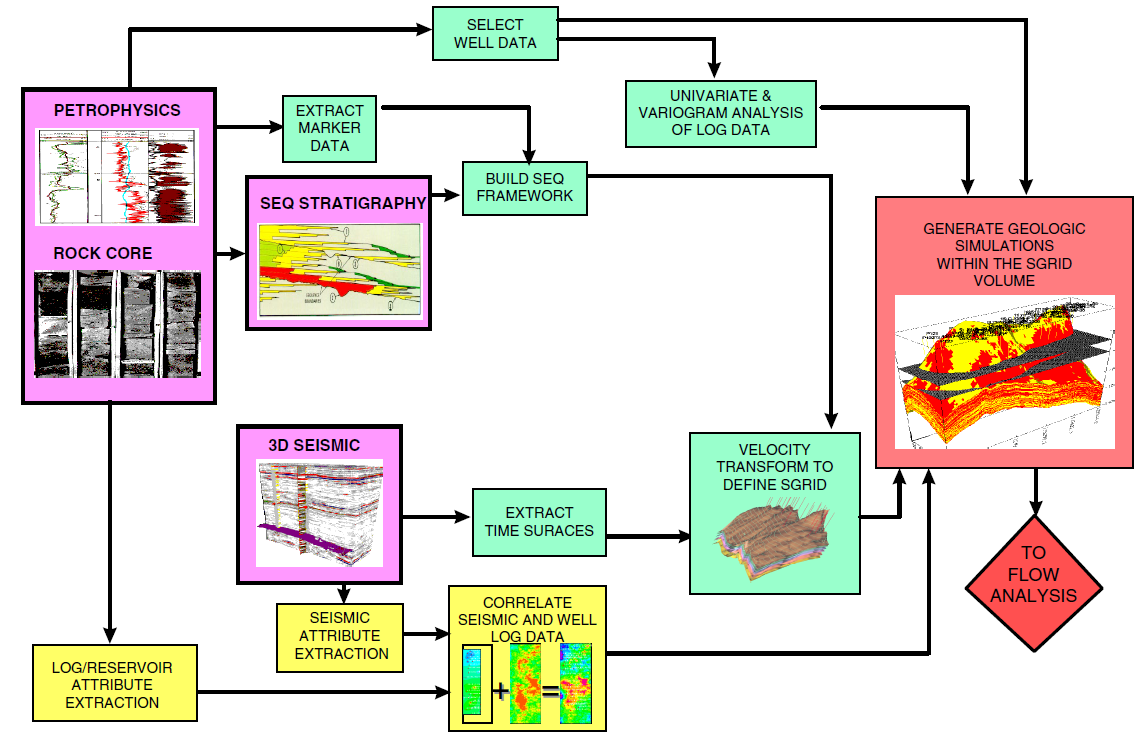

The upstream oil & gas domain is an ideal candidate for Agentic AI because of its complex data ecosystems, high-value decision cycles, and inherently multidisciplinary workflows. I use the example of integrated asset management and upstream operations to show how companies rely on the continuous integration of seismic interpretation, log analysis, fluid characterization, production monitoring, and economic evaluation, each with its own data types, spatial and temporal resolution, and domain-specific tooling. These workflows are data-intensive, decision-centric, iterative, and sensitive to uncertainty. This level of complexity aligns directly with the capabilities of Agentic AI systems, which can operate across modalities, reason over interdependent processes, adapt to changing data states, and interact with both humans and tools in context. One of the most common practices for petroleum engineers is to build a “geomodel”. This geomodel is then used by dozens of experts to make various types of decisions about drilling, production forecasting, injection, and so on. The annoying part is that every few months, as new data from producing wells or newly drilled wells are gathered, the geomodel has to be updated.

The tasks I mention here are challenging and time-consuming, but the bright side is that the industry has established workflows that have been developed and refined over decades. By layering Agentic AI over these established workflows, such as physics-based reservoir simulation, we create the potential for intelligent coordination, automated interpretation, and dynamic scenario evaluation. At first glance, the promise of Agentic AI for upstream oil and gas might resemble what existing simulation software packages from service companies already offer. For an Agentic AI approach to gain momentum among oil and gas experts and business decision-makers, a clear technical distinction must be established between Agentic AI systems and conventional E&P platforms like SLB Petrel or Delfi.

Simulation packages automate known workflows through GUIs, scripts, macros, and plugins. These packages follow human-defined process trees and require numerous manual interventions at phase transitions, during history matching, calibration, and so on. An Agentic AI system, by contrast, should dynamically plan, select, and adapt workflows based on goal evaluation, feedback, and tool interaction. It should also reason about incomplete goals, decompose them, and discover tool sequences autonomously. It has to run iterations automatically, without human intervention, when model/data/goal misalignments are detected. For example, in Petrel a user scripts a facies modeling process, whereas an agentic AI should decide whether facies modeling is even needed, based on seismic uncertainty and the intended development strategy.
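To make the contrast concrete, here is a minimal Python sketch of that plan/act/check loop, under loud assumptions: the tools (`facies_modeling`, `property_modeling`, `history_match_step`), the `ModelState` fields, and the decision thresholds are all hypothetical, each tool just shrinks a toy misfit number, and a rule-based `plan` function stands in for the LLM-based planner a real agent would use. The point is the control flow: the agent first decides which steps are needed, then keeps iterating while the model/data misalignment stays above tolerance.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ModelState:
    """Toy stand-in for the shared state an agent reasons over (hypothetical)."""
    seismic_uncertainty: float          # normalized 0..1 score (assumed)
    development_strategy: str           # e.g. "infill" or "waterflood" (assumed labels)
    misfit: float = 1.0                 # normalized model/data misfit
    log: list[str] = field(default_factory=list)


# Hypothetical tools. Real ones would wrap platform scripts or APIs;
# here each one records itself and shrinks the toy misfit.
def facies_modeling(s: ModelState) -> None:
    s.misfit *= 0.6
    s.log.append("facies_modeling")


def property_modeling(s: ModelState) -> None:
    s.misfit *= 0.7
    s.log.append("property_modeling")


def history_match_step(s: ModelState) -> None:
    s.misfit *= 0.5
    s.log.append("history_match_step")


TOOLS: dict[str, Callable[[ModelState], None]] = {
    "facies_modeling": facies_modeling,
    "property_modeling": property_modeling,
    "history_match_step": history_match_step,
}


def plan(s: ModelState) -> list[str]:
    """Rule-based planner standing in for an LLM planner: decide whether
    facies modeling is needed at all (thresholds are assumptions)."""
    steps = []
    if s.seismic_uncertainty > 0.3 or s.development_strategy == "waterflood":
        steps.append("facies_modeling")
    steps.append("property_modeling")
    return steps


def run_agent(s: ModelState, tolerance: float = 0.1, max_iters: int = 10) -> ModelState:
    # Execute the planned steps.
    for step in plan(s):
        TOOLS[step](s)
    # Feedback loop: keep iterating while model and data stay misaligned.
    for _ in range(max_iters):
        if s.misfit <= tolerance:
            break
        TOOLS["history_match_step"](s)
    return s
```

With low seismic uncertainty and an infill strategy, `run_agent(ModelState(0.1, "infill"))` skips facies modeling and goes straight to property modeling, a decision a fixed script would not make on its own.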

Another difference is that conventional simulation has no integrated reasoning, and interpreting the outcomes of predictive models relies on the engineer’s intuition. An Agentic AI system must provide embedded planning, diagnostic reasoning, and self-evaluation, moving from “task executor” to “task explainer” and strategy optimizer by adjusting and reusing historical modeling decisions across subsets of wells, reservoir sections, or different fields. Petrel won’t explain why a history match fails. An agentic system should be capable of analyzing mismatch sources, reasoning over model components, and proposing changes to flow units, assumptions, or reservoir parameters.
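A first step toward that kind of diagnosis could be as simple as ranking wells by misfit and labeling the misfit pattern. The Python sketch below assumes time-aligned, equal-length observed and simulated rate series per well; the function name, the thresholds, and the labels are hypothetical. It separates a systematic offset (the mean residual accounts for most of the RMS misfit) from residuals that change sign over time, which is the kind of evidence an agent could then reason over when proposing changes to flow units or reservoir parameters.

```python
import math


def diagnose_history_match(
    observed: dict[str, list[float]],
    simulated: dict[str, list[float]],
    tol: float = 0.1,
) -> dict[str, tuple[float, str]]:
    """Rank wells by normalized RMS misfit and label the misfit pattern.

    Assumes equal-length, time-aligned series per well. The labels are a
    crude first cut, not a substitute for engineering diagnosis.
    """
    report: dict[str, tuple[float, str]] = {}
    for well, obs in observed.items():
        sim = simulated[well]
        residuals = [s - o for s, o in zip(sim, obs)]
        n = len(residuals)
        scale = max(sum(abs(o) for o in obs) / n, 1e-9)   # mean observed magnitude
        nrmse = math.sqrt(sum(r * r for r in residuals) / n) / scale
        bias = sum(residuals) / n / scale                  # signed, same scale as nrmse
        if nrmse <= tol:
            label = "matched"
        elif abs(bias) >= 0.8 * nrmse:                     # misfit dominated by an offset
            label = "systematic over-prediction" if bias > 0 else "systematic under-prediction"
        else:                                              # residuals change sign over time
            label = "shape/timing mismatch"
        report[well] = (round(nrmse, 3), label)
    # Worst-matched wells first, so the agent reasons about them first.
    return dict(sorted(report.items(), key=lambda kv: kv[1][0], reverse=True))
```

Because the mean residual can never exceed the RMS residual, the 0.8 ratio simply asks whether most of the misfit is a constant offset; the cutoff itself is an arbitrary choice for illustration.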

Three High-Impact Areas for Agentic AI in Upstream Oil & Gas

1. Dynamic Multi-Modal Data Fusion & Interpretation

One of the biggest challenges in reservoir engineering and asset management is that each technical discipline, such as petrophysics, geostatistics, geology, or reservoir engineering, maintains its own data interpretation stream. Integration across domains, for example linking porosity interpretations from well logs with acoustic impedance from seismic, is complex, iterative, and often manual. These interconnected data flows include core- and log-derived porosity models feeding into geostatistics, seismic inversion volumes used to guide facies distribution, and fluid sample data informing PVT models and simulation inputs. By integrating these blocks, you go from data to geostatistical analysis, to a reservoir characterization model, to porous-media fluid flow dynamics, to flow simulation, and eventually to economic evaluation of the asset.

The improvement expected from Agentic AI is an orchestrator agent that continuously monitors incoming data across domains, such as new log runs, updated seismic reprocessing, and production time series, and updates intermediate models accordingly. This way, it can automatically reconcile petrophysical interpretations with seismic inversion outputs, and use prior well models and rock property predictions to suggest missing measurements or flag inconsistencies in new core lab data. Such agents would have the memory and reasoning to connect static and dynamic data streams without waiting for manual re-integration steps.
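One way to picture the orchestrator is as a dependency graph over the data flows described above: when one input changes, every model downstream of it becomes stale and must be rebuilt in dependency order. The sketch below uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+). The node names and edges are a hypothetical simplification of the logs to porosity to geostatistics to reservoir model to flow simulation to economics chain, not the schema of any real platform.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each intermediate model -> the inputs it is
# built from, loosely following the data flows described in the text.
DEPENDS_ON: dict[str, set[str]] = {
    "porosity_model": {"well_logs", "core_data"},
    "geostatistical_model": {"porosity_model", "seismic_inversion"},
    "reservoir_model": {"geostatistical_model"},
    "pvt_model": {"fluid_samples"},
    "flow_simulation": {"reservoir_model", "pvt_model", "production_data"},
    "economics": {"flow_simulation"},
}


def stale_models(updated: str) -> set[str]:
    """Every model that (transitively) depends on the updated input."""
    stale: set[str] = set()
    frontier = [updated]
    while frontier:
        node = frontier.pop()
        for model, inputs in DEPENDS_ON.items():
            if node in inputs and model not in stale:
                stale.add(model)
                frontier.append(model)
    return stale


def rebuild_order(updated: str) -> list[str]:
    """Stale models in an order that respects their dependencies."""
    stale = stale_models(updated)
    # Keep only edges between stale models; fresh inputs need no rebuild.
    subgraph = {m: DEPENDS_ON[m] & stale for m in stale}
    return list(TopologicalSorter(subgraph).static_order())
```

In practice each node would wrap a real workflow step, and the agent would queue the steps from `rebuild_order("well_logs")` as soon as a new log run lands, rather than waiting for someone to re-integrate manually.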

2. Iterative, Goal-Driven Model Building and Scenario Evaluation

Reservoir models are built iteratively, with increasing sophistication from basic to advanced models, as more wells are drilled, more production data becomes available, and more money is spent by the operator. In general, though, decisions on which modeling path to follow are heuristic and human-dependent. Sensitivity runs, model scaling, and scenario evaluations are time-consuming. Business drivers (ROI, feasibility) are often disconnected from technical modeling feedback loops.

An Agentic AI system should act as a modeling strategist agent, capable of planning modeling workflows based on quarterly business objectives and current data availability, and of evaluating trade-offs between model fidelity, cost, and uncertainty, for example deciding when full seismic-guided geostatistical modeling is worth applying. It should be able to autonomously trigger simulation runs, adjust model parameters, and summarize economic outcomes. Such an agent could evaluate multiple seismic attribute correlation strategies and propose the most informative combinations for cokriging based on uncertainty, expected value, and computational cost.
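The cokriging example can be sketched as a screening step for secondary variables. This is a minimal sketch, not cokriging itself: it assumes seismic attribute values have already been extracted at well locations, scores each attribute by its absolute Pearson correlation with well porosity, drops weakly correlated ones, and subtracts an assumed cost penalty. The function name, attribute names, costs, and weights are hypothetical, the sample data in the test are made up, and the expected-value and uncertainty terms the text mentions are left out.

```python
import math


def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def rank_secondary_attributes(
    well_porosity: list[float],
    attributes: dict[str, list[float]],   # attribute values sampled at the same wells
    cost: dict[str, float],               # relative cost of producing each attribute (assumed)
    cost_weight: float = 0.1,
    min_abs_r: float = 0.5,
    k: int = 2,
) -> list[tuple[str, float]]:
    """Screen candidate secondary variables for cokriging: drop weakly
    correlated attributes, then trade correlation strength against cost."""
    scored = []
    for name, values in attributes.items():
        r = pearson(values, well_porosity)
        if abs(r) < min_abs_r:
            continue
        scored.append((abs(r) - cost_weight * cost[name], name, r))
    scored.sort(reverse=True)   # highest score first
    return [(name, round(r, 3)) for _, name, r in scored[:k]]
```

With made-up data, a cheap attribute with somewhat weaker correlation can outrank a stronger but expensive one, and setting `cost_weight=0.0` restores a pure correlation ranking. That weight is the kind of knob a modeling strategist agent would tune against business objectives.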

3. Cross-Disciplinary Workflow Coordination and Knowledge Retention

Current practices and cloud-based reservoir simulation packages have already improved team coordination, but upstream expert teams still rely heavily on individual expert knowledge. Integration across sub-teams is communication-dependent, and there is no persistent system memory that captures decisions, justifications, and failed paths. By embedding an overarching Agentic AI within workflows, organizations can build persistent cognitive infrastructure where “team assistant agents” autonomously track workstream progress, detect bottlenecks, and recommend next actions. Another set of “knowledge archive agents” would store decline analysis outcomes, geomodels, and justifications from previous field studies, enabling reuse and transfer learning. For example, a team assistant agent could detect that seismic-derived porosity estimates diverge from log-based estimates and automatically surface prior case studies or recommend a model calibration step.
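The team assistant example above could start from a check like the one below. It is a minimal sketch under stated assumptions: porosity is compared at a single well as a relative difference against an assumed tolerance, and the knowledge archive is a hypothetical in-memory tuple of tagged cases (`ArchivedCase`, `ARCHIVE`, the tags, and the field names are placeholders). A real knowledge archive agent would need persistent storage and some form of retrieval over the stored justifications.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ArchivedCase:
    """Entry in a hypothetical knowledge archive of prior field studies."""
    field_name: str
    tags: frozenset[str]


# Placeholder archive contents, for illustration only.
ARCHIVE: tuple[ArchivedCase, ...] = (
    ArchivedCase("Field A", frozenset({"porosity_divergence", "seismic_calibration"})),
    ArchivedCase("Field B", frozenset({"decline_analysis"})),
    ArchivedCase("Field C", frozenset({"porosity_divergence"})),
)


def check_porosity_consistency(
    well: str,
    log_porosity: float,
    seismic_porosity: float,
    rel_tol: float = 0.15,                       # acceptable relative difference (assumed)
    archive: tuple[ArchivedCase, ...] = ARCHIVE,
) -> dict:
    """Flag divergence between log- and seismic-derived porosity at a well
    and surface archived cases tagged with the same issue."""
    rel_diff = abs(seismic_porosity - log_porosity) / log_porosity
    if rel_diff <= rel_tol:
        return {"well": well, "status": "consistent"}
    return {
        "well": well,
        "status": "divergent",
        "relative_difference": round(rel_diff, 3),
        "prior_cases": [c.field_name for c in archive if "porosity_divergence" in c.tags],
        "recommendation": "model calibration step",
    }
```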

Last Word

This all won’t happen overnight, though. Agentic AI adoption in upstream oil and gas will occur in phased stages over the next 5 to 10 years. In the near term (1 to 2 years), implementation will be limited to proof-of-concept (POC) projects within digital subsurface and innovation teams. Initial use cases will focus on workflow automation, such as seismic inversion orchestration, simulation loop management, and structured report generation, by interfacing with existing platforms like Petrel and Delfi through available APIs and scripting layers. Medium-term adoption (2 to 4 years) will see expansion across asset teams, where multi-agent systems coordinate domain-specific workflows like well log interpretation, seismic processing, and reservoir modeling, and connect their outputs to planning and economic evaluation processes. One factor that could significantly accelerate this is integration with cloud-native data platforms and the success of unified data standards like The Open Group’s OSDU. In the long term (5 to 10+ years), Agentic AI may support continuous model maintenance, real-time data assimilation, and scenario evaluation at the enterprise level. Adoption will be constrained by software interoperability, operational safety requirements, and the need for traceability in decision-making.

Agentic AI will not replace established platforms, but it will gradually phase out repetitive, routine interpretation tasks. So petrophysicists or geostatistics experts who have been doing the same thing for the past several years are the most likely to be affected by large-scale implementation of Agentic AI systems. To stay relevant as long as possible, these experts must understand how subsurface data, models, and business goals connect, and then learn to design workflows that AI agents can execute. Think less like an analyst, more like an orchestrator. That’s how to stay in the loop.

Sources:

Janele, P. T., T. J. Galvin, and M. J. Kisucky. "Integrated asset management: Work process and data flow models." SPE Asia Pacific Conference on Integrated Modelling for Asset Management. SPE, 1998.

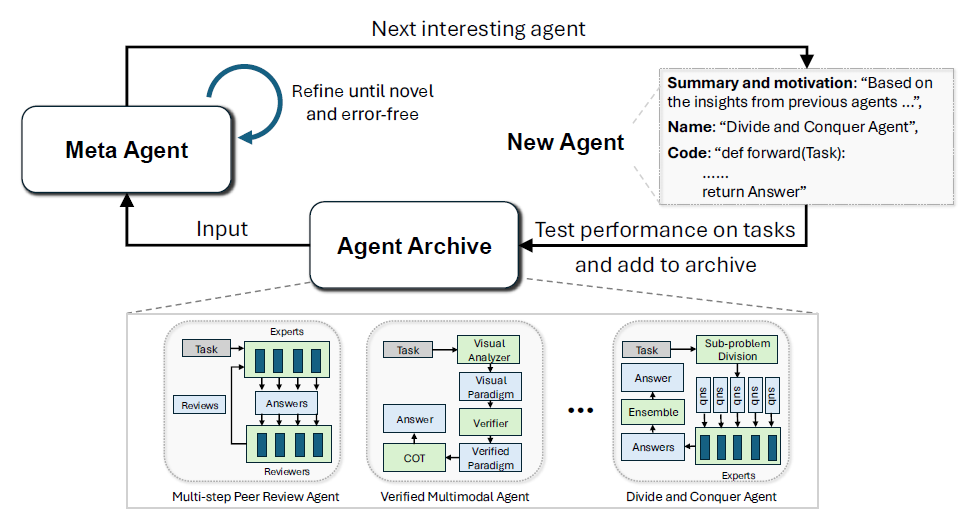

Hu, Shengran, Cong Lu, and Jeff Clune. "Automated design of agentic systems." arXiv preprint arXiv:2408.08435 (2024).